optim4ai

Optimisation for Artificial Intelligence, a 4-day course

Introduction to tensors


In the previous section, after giving a shot at implementing basic gradient descent methods, we used NumPy to manipulate arrays of floats and SciPy for its optimisation methods. Such arrays can have any number of dimensions:


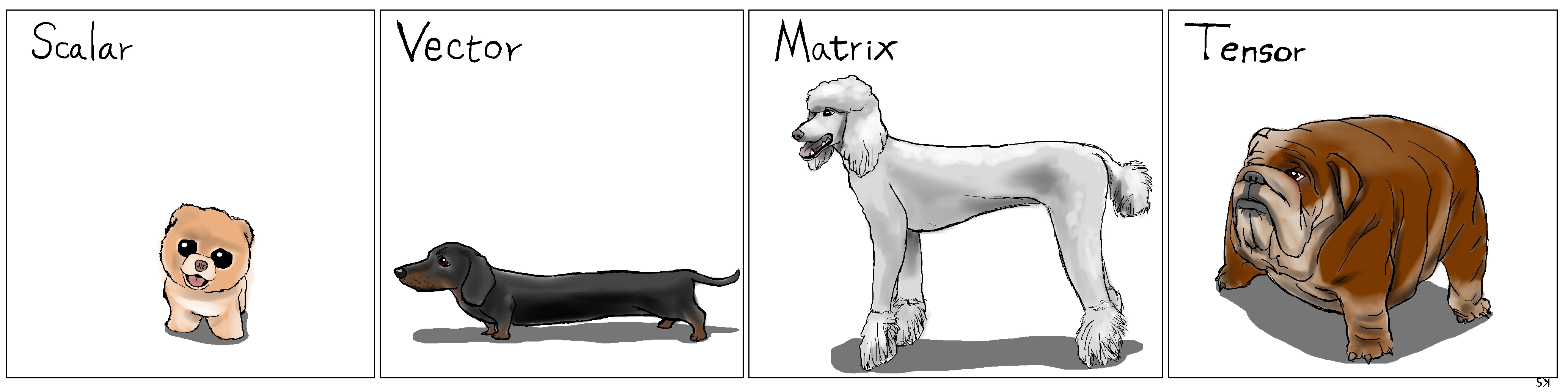

- a zero-dimensional array would be called a scalar;

  ```python
  >>> import numpy as np
  >>> np.zeros(())  # the shape is the empty tuple
  array(0.)
  ```

- a one-dimensional array would be a vector;

  ```python
  >>> np.zeros((5,))  # or np.zeros(5)
  array([0., 0., 0., 0., 0.])
  ```

- a two-dimensional array, a matrix.

  ```python
  >>> np.zeros((5, 5))  # shape as a tuple: the second positional argument is the dtype
  array([[0., 0., 0., 0., 0.],
         [0., 0., 0., 0., 0.],
         [0., 0., 0., 0., 0.],
         [0., 0., 0., 0., 0.],
         [0., 0., 0., 0., 0.]])
  ```
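The number of dimensions is not just a naming convention: NumPy stores it on every array. A small sketch that rebuilds the three examples above and prints their `ndim` (number of axes) and `shape` (size along each axis):

```python
import numpy as np

# The three arrays from the list above
scalar = np.zeros(())      # shape ()
vector = np.zeros(5)       # shape (5,)
matrix = np.zeros((5, 5))  # shape (5, 5)

for name, arr in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    # ndim counts the axes; shape gives the length along each axis
    print(name, arr.ndim, arr.shape)
# scalar 0 ()
# vector 1 (5,)
# matrix 2 (5, 5)
```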

In the general case, an array with any number of dimensions is called a tensor.

Image credit: http://karlstratos.com/#drawings
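To make the general case concrete, here is a sketch with a three-dimensional NumPy array; the shape `(2, 3, 4)` is an arbitrary choice for illustration. Nothing new is needed beyond a longer shape tuple, and indexing or reducing along an axis removes that axis, bringing us back to matrices, vectors and scalars:

```python
import numpy as np

# A three-dimensional array: same function, longer shape tuple
t = np.zeros((2, 3, 4))
print(t.ndim, t.shape)       # 3 (2, 3, 4)

# Each integer index removes one axis
print(t[0].shape)            # (3, 4): a matrix
print(t[0, 1].shape)         # (4,): a vector
print(np.ndim(t[0, 1, 2]))   # 0: a scalar

# Reducing along an axis removes it as well
print(t.sum(axis=0).shape)   # (3, 4)
```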

Well, yes, that’s all, of course. But there’s more.

While it is true that we could train neural networks with NumPy arrays alone (it is really not complicated, but off topic here), the point of libraries like PyTorch is to offer more than what NumPy already provides.

But to start with, PyTorch provides most of what NumPy does, and a PyTorch tensor plays the role of a NumPy array:

```python
>>> import torch
>>> torch.zeros(())
tensor(0.)
>>> torch.zeros(5)
tensor([0., 0., 0., 0., 0.])
>>> torch.zeros(5, 5)  # unlike np.zeros, the sizes may also be passed as separate arguments
tensor([[0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0.]])
```
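The resemblance between the two libraries can be checked directly. A minimal sketch, assuming both NumPy and PyTorch are installed and tensors stay on the CPU: it compares shapes, dimensions and default float types, then converts between the two with `torch.from_numpy` and `Tensor.numpy()`.

```python
import numpy as np
import torch

# The same 5x5 matrix of zeros, built with each library
a = np.zeros((5, 5))
t = torch.zeros(5, 5)

# Both report their shape and number of dimensions
print(a.shape, t.shape)   # (5, 5) torch.Size([5, 5])
print(a.ndim, t.ndim)     # 2 2

# Default float types differ: NumPy uses float64, PyTorch uses float32
print(a.dtype, t.dtype)   # float64 torch.float32

# torch.from_numpy shares memory with the array (no copy is made)
shared = torch.from_numpy(a)
a[0, 0] = 1.0
print(shared[0, 0])       # tensor(1., dtype=torch.float64)

# Tensor.numpy() goes the other way for CPU tensors, again without copying
b = t.numpy()
print(b.dtype)            # float32
```

Because these conversions share memory, a change on one side is visible on the other; call `.copy()` on the array or `.clone()` on the tensor when an independent copy is needed.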
